Why and How to Disclose the Use of AI

With the emergence of generative AI, it now takes just a few button-clicks for anyone to create or manipulate data and convince others that fake content is real.

This newfound ability is eroding digital trust at an alarming rate. Indeed, it threatens to undermine society’s most critical decision-making tools: the evidence of our eyes and ears. It’s already affecting America’s electoral process. An Oct. 2024 McAfee survey found that 63% of Americans said they’ve seen a deepfake image or video in the past 60 days, and nearly half of the people who’ve seen one said it’s influenced who they’ll vote for in the Nov. 2024 election.

Satire and parody are nothing new in politics. But up until now most people have been able to discern the difference between a satirical illustration and actual photographic evidence. AI images are so sophisticated that we are quickly losing that ability.

That’s led many concerned leaders and organizations, including the Transparency Coalition, to call for legislation that requires the disclosure of AI use in digital content.

California recently passed SB 942, the first statewide AI notification standard, and we expect similar proposals to be introduced in state legislatures in early 2025.

SB 942, the California AI Transparency Act, requires AI developers to embed detectable disclosures in the media their AI creates, and to post an AI decoder tool on their website. The decoder tool would allow consumers to upload digital media and discover whether the developer’s AI was used to create that content.

How provenance makes disclosure possible

How is that done, exactly? Disclosing the use of AI to create or modify a digital object is possible because of provenance.

Data provenance refers to a documented trail that tracks the origin, changes, and history of data and digital content, allowing users to understand and verify where the data or content came from, how it was transformed, and by whom. It’s similar in concept to the provenance documents used by buyers and sellers to authenticate works of fine art.

In the AI world, provenance applies both to the AI system’s inputs (the training data) and its outputs (most commonly, a generative AI image or video).

Inputs: Provenance helps ensure that data brokers are transparent about their datasets. It also provides a chain of information through which data can be tracked as researchers use other researchers’ data and adapt it for their own purposes.

Outputs: Provenance offers a mechanism to disclose the use of AI to create or modify a piece of content. This is increasingly important in a world where political campaign materials are infused with AI-generated images, and the authenticity of evidence becomes more difficult to determine every day.

Provenance lives in the metadata

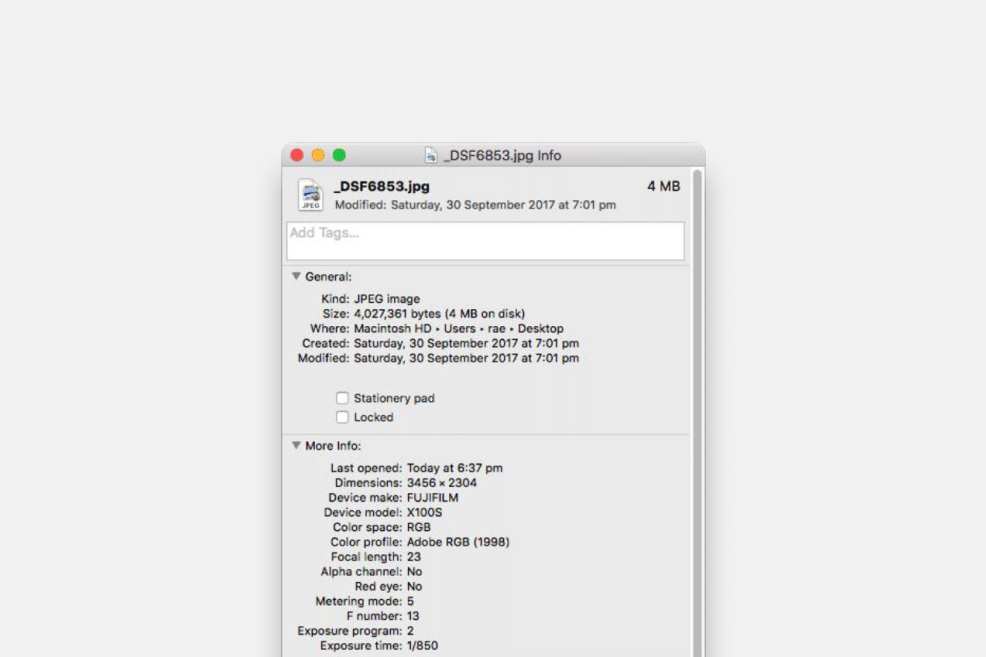

Metadata is information contained within the code of a digital object. We don’t see it, but it exists in the background like a hidden caption. Many people are familiar with the metadata attached to digital photographs. If you right-click an image and select “Get Info,” you can see all kinds of details, as in this example:

When we talk about provenance, we’re talking about similar metadata embedded within the code of a dataset or a digital object. Although the analogy isn’t perfect, it works something like a digital serial number.
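To make the idea concrete, here is a minimal sketch in Python of what a provenance record might look like. It is an illustration only, not a real standard: the field names, the `build_provenance_record` and `record_matches_content` helpers, and the tool name `ExampleImageModel` are all hypothetical. The record stores a cryptographic fingerprint (a SHA-256 hash) of the content, so anyone can check whether a given file is the one the record describes.

```python
import hashlib
import json


def build_provenance_record(content: bytes, tool: str, action: str, timestamp: str) -> dict:
    """Describe where a piece of content came from (all field names are hypothetical)."""
    return {
        "content_sha256": hashlib.sha256(content).hexdigest(),  # fingerprint of the exact bytes
        "tool": tool,            # which AI system or editor produced the content
        "action": action,        # for example "created" or "modified"
        "timestamp": timestamp,  # when the action happened, as an ISO 8601 string
    }


def record_matches_content(record: dict, content: bytes) -> bool:
    """Return True only if the record's fingerprint matches these exact bytes."""
    return record["content_sha256"] == hashlib.sha256(content).hexdigest()


# Stand-in bytes; a real workflow would read them from an image or video file.
image_bytes = b"stand-in for the bytes of an image file"
record = build_provenance_record(
    image_bytes, "ExampleImageModel", "created", "2024-10-01T12:00:00Z"
)
print(json.dumps(record, indent=2))
print(record_matches_content(record, image_bytes))              # same bytes
print(record_matches_content(record, image_bytes + b"edited"))  # altered bytes
```

A record like this says nothing about who wrote it or whether it has been swapped out, which is why real systems pair it with protections such as the signatures described later in this article.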

Recording and preserving provenance data for AI-generated content requires a combination of technical and social solutions that can ensure that provenance data is accurate, complete, consistent, accessible, and secure.

Other forms of provenance

Other techniques may be used to embed provenance in an AI-created image or video, including:

Watermarks: These are visible or invisible marks added to digital media files to indicate their origin or ownership. Watermarks can be textual or graphical symbols that are embedded in images, videos, or audio files. Many people are familiar with the Getty Images watermark, which lets users preview an image while protecting Getty’s copyright.

Getty Images has created one of the world’s most well-known watermarks.
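An invisible watermark can be sketched in a few lines of Python. The example below is a simplified illustration, not how any particular vendor does it: it hides a short marker in the least significant bit of each pixel value, which changes each value by at most 1 and is imperceptible to the eye. The function names are hypothetical, and the "image" is just a sequence of raw bytes standing in for pixel data.

```python
def embed_watermark(pixels: bytes, mark: bytes) -> bytes:
    """Hide `mark` in the lowest bit of the first len(mark) * 8 pixel values."""
    # Turn the marker into a list of bits, most significant bit first.
    bits = [(byte >> shift) & 1 for byte in mark for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("image too small for this watermark")
    out = bytearray(pixels)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & 0xFE) | bit  # clear the lowest bit, then set it to the mark bit
    return bytes(out)


def extract_watermark(pixels: bytes, length: int) -> bytes:
    """Read back `length` bytes of marker from the lowest bits of the pixels."""
    mark = bytearray()
    for i in range(length):
        value = 0
        for pixel in pixels[i * 8:(i + 1) * 8]:
            value = (value << 1) | (pixel & 1)
        mark.append(value)
    return bytes(mark)


# Stand-in pixel data; a real image would be decoded with an imaging library.
original = bytes(range(256))
marked = embed_watermark(original, b"AI")
print(extract_watermark(marked, 2))
```

A mark this simple is easily destroyed by resizing or recompressing the image, so production watermarking schemes use more robust techniques.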

Digital Signatures: Digital signatures use public-key cryptography. The signer uses a private key to generate a unique code that is attached to a digital media file. Anyone who has the signer’s public key can verify the code, which confirms who signed the file and that it has not been altered since.
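The sign-and-verify cycle can be shown with a toy example. The Python sketch below uses textbook RSA with small, well-known primes so that it runs with no extra libraries. It is deliberately insecure and is meant only to show the mechanics. The key values and function names are assumptions made for illustration, and a real system would rely on a vetted cryptography library.

```python
import hashlib

# Toy key material: two known Mersenne primes. They are far too small and too
# predictable to be secure; they only keep the example free of dependencies.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (requires Python 3.8+)


def digest_as_int(data: bytes) -> int:
    """Hash the content and reduce it to a number smaller than the modulus."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % N


def sign(data: bytes) -> int:
    """Create a signature with the private exponent D."""
    return pow(digest_as_int(data), D, N)


def verify(data: bytes, signature: int) -> bool:
    """Check a signature using only the public values E and N."""
    return pow(signature, E, N) == digest_as_int(data)


media = b"stand-in for the bytes of a media file"
signature = sign(media)
print(verify(media, signature))               # untouched file
print(verify(media + b"edited", signature))   # altered file
```

Only the holder of the private exponent can produce a valid signature, while anyone can run the check, which is what makes signatures useful for proving who attached a provenance claim.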

Blockchain: A blockchain is a shared, tamper-evident ledger, best known for creating and managing digital assets such as cryptocurrencies, tokens, and smart contracts. It can also be used to record and preserve provenance data for AI-generated content, such as its source, creation process, ownership, and distribution.
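The core idea behind a blockchain, that each entry is locked to the one before it, can be sketched as a simple hash chain in Python. This is a simplified illustration and not a full blockchain: it has no network, consensus, or tokens. The function names and event descriptions are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(event: str, prev_hash: str) -> str:
    """Fingerprint an entry together with the hash of the entry before it."""
    body = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def add_entry(chain: list, event: str) -> None:
    """Append a provenance event that is linked to the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev_hash": prev_hash,
                  "hash": entry_hash(event, prev_hash)})


def chain_is_valid(chain: list) -> bool:
    """Recompute every link; any edit to an earlier entry breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["event"], entry["prev_hash"]):
            return False
        prev_hash = entry["hash"]
    return True


history = []
add_entry(history, "image created by an AI model")
add_entry(history, "image cropped in an editor")
add_entry(history, "image published on a website")
print(chain_is_valid(history))

history[1]["event"] = "image untouched"  # try to rewrite history
print(chain_is_valid(history))
```

Because each hash depends on everything before it, quietly changing one step in a file's history invalidates every later entry, which is the property that makes this structure attractive for provenance records.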

The need for digital provenance tools has sparked the creation of a niche industry, with start-up companies and research groups like Steg.AI, Numbers Protocol, the Content Blockchain Project, and the Starling Lab for Data Integrity creating cryptographic methods and web protocols to establish authenticity in the digital space.
